Cache Invalidation Techniques

TTLs, Write-Through, CDC, and Other Methods Explained with Pitfalls

In the last post, we saw WHY cache invalidation is considered “one of the most difficult things in software engineering”.

If it is so damn difficult, how do we do it properly? Well, that’s what we will be looking at in this post.

We will look at different caching techniques and how each one handles invalidation for different use cases, along with their pitfalls.

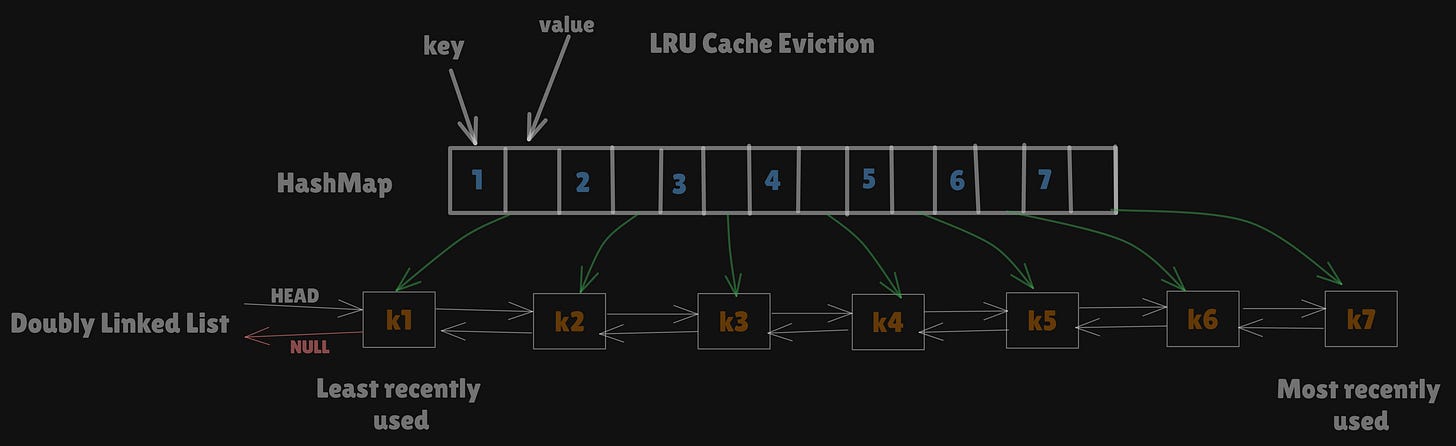

Least Recently Used (LRU)

How it works:

Tracks the last time each key was accessed and evicts the key that has not been used for the longest time.

Pros:

Good for workloads with strong temporal locality (recent data is reused).

Widely used in systems such as OS page caches and web browsers.

Cons:

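Requires a doubly linked list (or a tree ordered by access time) for recency ordering, plus a hash map for O(1) lookup.

Every read also has to update that ordering, which adds per-entry memory overhead and lock contention in very large or highly concurrent caches.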
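A minimal sketch of that classic structure, using Go's standard `container/list` as the doubly linked list and a map for O(1) lookups. The `LRU` type, its string keys and `int` values, and the method names are illustrative assumptions; the sketch is also not safe for concurrent use without an added lock.

```go
package main

import "container/list"

// entry is stored in each list element: the key (needed to remove it from
// the map on eviction) and the cached value.
type entry struct {
	key   string
	value int
}

// LRU is a fixed-capacity cache that evicts the least recently used key.
// The list holds keys in recency order (front = most recently used); the
// map points each key at its list element for O(1) access.
type LRU struct {
	capacity int
	order    *list.List
	items    map[string]*list.Element
}

func NewLRU(capacity int) *LRU {
	return &LRU{capacity: capacity, order: list.New(), items: make(map[string]*list.Element)}
}

// Get returns the value for key and marks it as most recently used.
func (c *LRU) Get(key string) (int, bool) {
	el, ok := c.items[key]
	if !ok {
		return 0, false
	}
	c.order.MoveToFront(el)
	return el.Value.(*entry).value, true
}

// Put inserts or updates key, evicting the least recently used key when full.
func (c *LRU) Put(key string, value int) {
	if c.capacity <= 0 {
		return // nothing can be cached
	}
	if el, ok := c.items[key]; ok {
		el.Value.(*entry).value = value
		c.order.MoveToFront(el)
		return
	}
	if c.order.Len() >= c.capacity {
		oldest := c.order.Back() // least recently used
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry).key)
	}
	c.items[key] = c.order.PushFront(&entry{key: key, value: value})
}
```

For example, with capacity 2, putting `a` and `b`, reading `a`, and then putting `c` evicts `b`, because `a` was touched more recently.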

Least Frequently Used (LFU)

How it works:

Tracks how many times each key has been accessed. Evicts the least frequently accessed.

Pros:

Ideal when we have "hot keys" that are consistently accessed over time.

Stable under long-tailed distributions.

Cons:

Needs frequency counters.

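Susceptible to cache pollution: a key that was hot early on keeps its high count and can stay cached long after it has gone cold.

Harder to implement efficiently, and usually needs aging (for example, periodically halving or exponentially decaying the counters) so that formerly hot keys can eventually be evicted.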

You can read more about the LFU implementation here.
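A simple LFU sketch, assuming string keys and `int` values. It keeps one counter per key and finds the least frequently used key with a linear scan, which costs O(n) per eviction; production LFU caches typically use frequency buckets to make eviction O(1). The `Age` method is a hypothetical illustration of the counter-halving form of aging.

```go
package main

// lfuItem holds a cached value and how many times it has been accessed.
type lfuItem struct {
	value int
	count int
}

// LFU evicts the key with the lowest access count when full. Ties between
// equal counts are broken arbitrarily (Go map iteration order).
type LFU struct {
	capacity int
	items    map[string]*lfuItem
}

func NewLFU(capacity int) *LFU {
	return &LFU{capacity: capacity, items: make(map[string]*lfuItem)}
}

// Get returns the value for key and bumps its access count.
func (c *LFU) Get(key string) (int, bool) {
	it, ok := c.items[key]
	if !ok {
		return 0, false
	}
	it.count++
	return it.value, true
}

// Put inserts or updates key, evicting the least frequently used key when full.
func (c *LFU) Put(key string, value int) {
	if c.capacity <= 0 {
		return
	}
	if it, ok := c.items[key]; ok {
		it.value = value
		it.count++
		return
	}
	if len(c.items) >= c.capacity {
		victim, minCount := "", -1
		for k, it := range c.items { // O(n) scan for the lowest count
			if minCount == -1 || it.count < minCount {
				victim, minCount = k, it.count
			}
		}
		delete(c.items, victim)
	}
	c.items[key] = &lfuItem{value: value, count: 1}
}

// Age halves every counter so that keys which were hot long ago lose their
// advantage and can eventually be evicted.
func (c *LFU) Age() {
	for _, it := range c.items {
		it.count /= 2
	}
}
```

Calling `Age` periodically (say, from a ticker) is what keeps an early burst of traffic from pinning a key in the cache forever.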

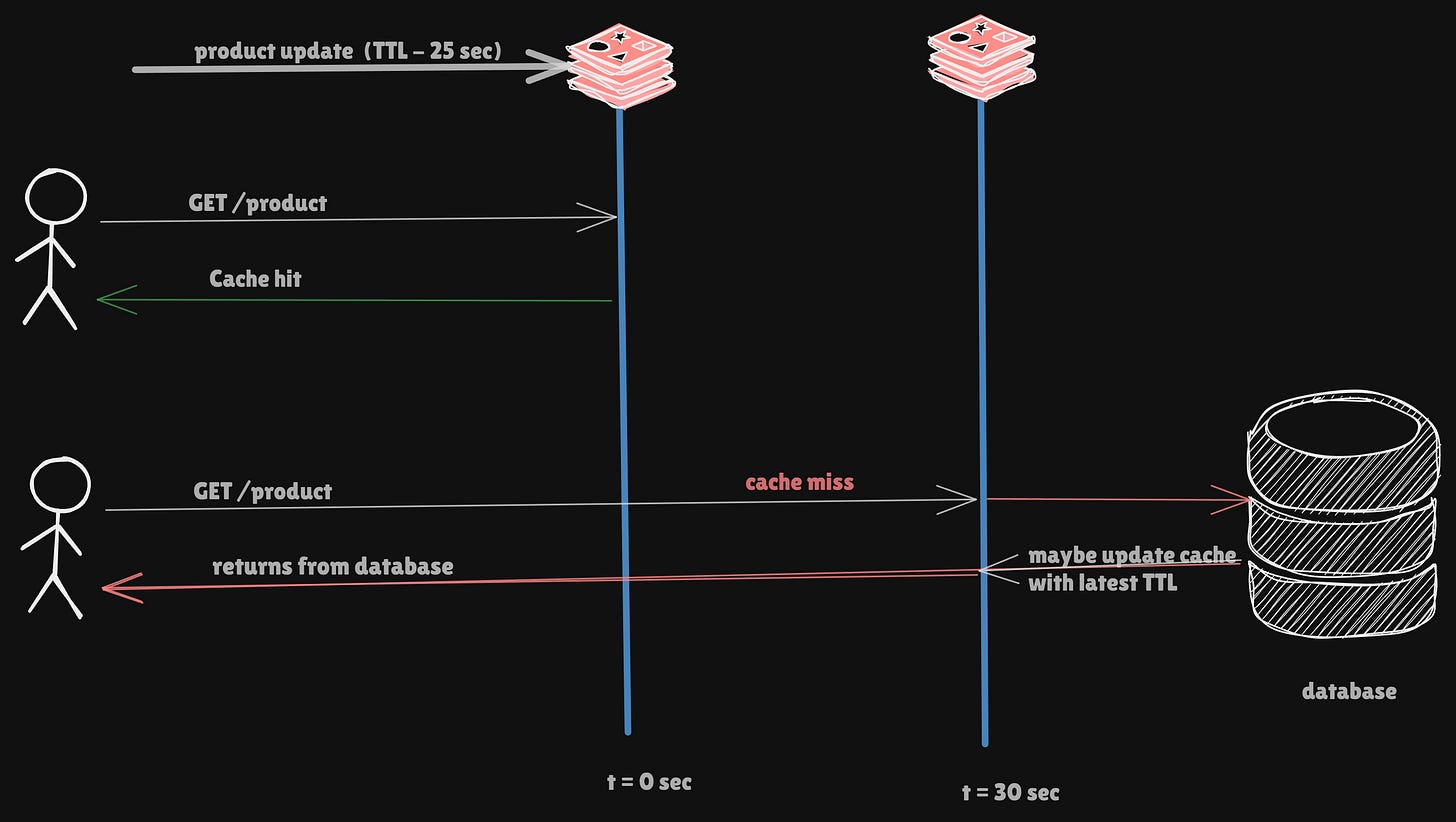

Time To Live (TTL)/Expiry based

Implementation:

Every cache entry stores a timestamp and a TTL duration. On read, the cache checks if `currentTime > timestamp + TTL`; if so, it's a miss. The TTL can be static (say, 5 minutes) or adaptive (based on the data).

Edge Cases:

Race condition: a client can read a stale value right before expiry; in the worst case, the value it gets is almost a full TTL out of date.

Best practice:

Use a short TTL plus background refresh when freshness is critical.

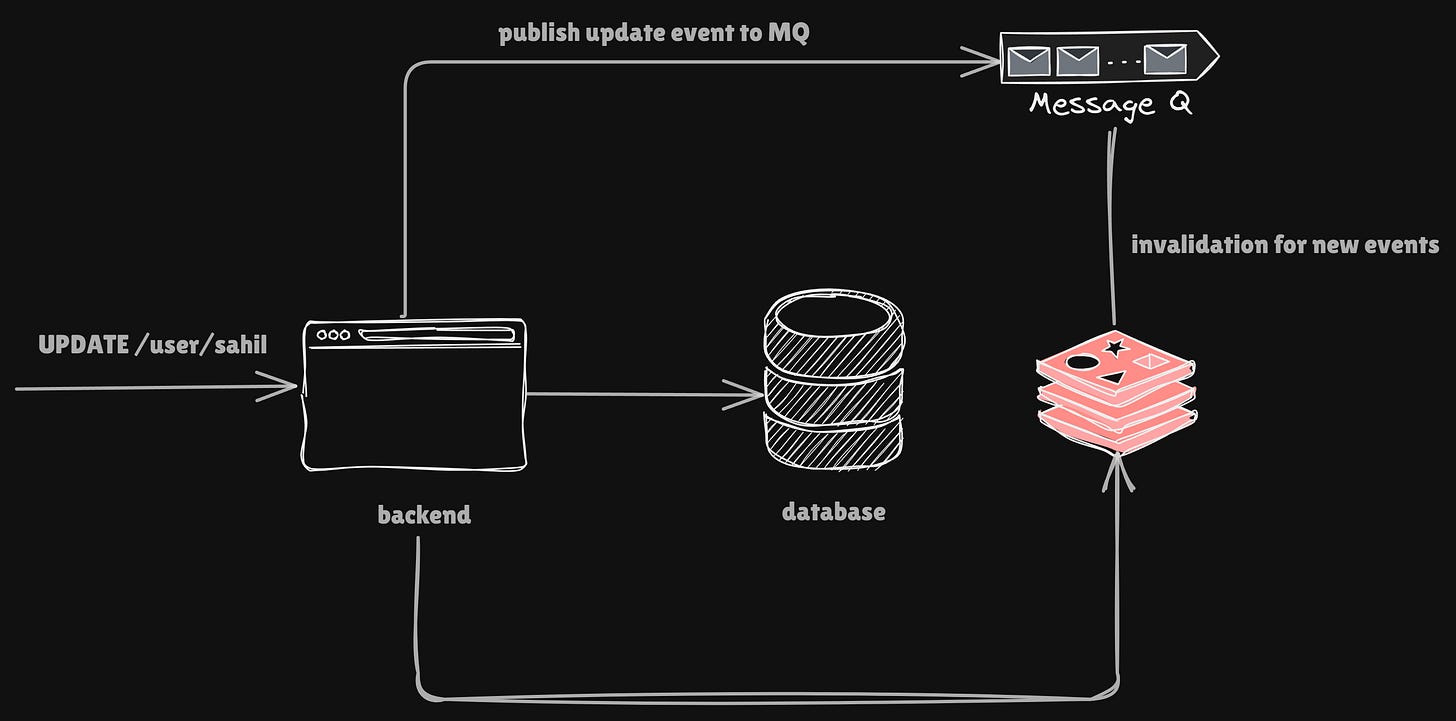

Event-Driven Invalidation

Manual Invalidation:

Example:

`DELETE /cache/user42`

This requires a mapping between backend keys and cache keys.

Write-Triggered Invalidation:

Typical flow:

```go
db.Update(userID, newData)
cache.Delete("user:" + userID)
```

With a pub-sub system:

On write: publish an event like `UserUpdated(userID)`.

The cache listens and invalidates accordingly.

Challenges:

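Hard to scale when multiple services update the same dataset, since every writer must remember to invalidate (or publish).

Bugs in invalidation logic lead to stale or inconsistent data being served.

Pub-sub delivery latency means stale data can be served until the invalidation event is processed.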

Best Practice:

Centralize invalidation logic in a data access layer
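A sketch of write-triggered invalidation behind a single data access layer. It is an in-process stand-in: a Go channel plays the role of the pub-sub topic and a map plays the database, and `UserRepo`, `Cache`, and `UserUpdated` are hypothetical names rather than any real broker's API.

```go
package main

import "sync"

// UserUpdated is the event published after a successful write.
type UserUpdated struct{ UserID string }

// UserRepo is the data access layer: every write goes through it, so the
// invalidation logic lives in one place instead of in every caller.
type UserRepo struct {
	mu     sync.Mutex
	db     map[string]string // stand-in for the real database
	events chan UserUpdated  // stand-in for a pub-sub topic
}

// Update writes the DB, then publishes the event.
func (r *UserRepo) Update(userID, data string) {
	r.mu.Lock()
	r.db[userID] = data
	r.mu.Unlock()
	r.events <- UserUpdated{UserID: userID}
}

// Cache subscribes to update events and deletes the affected keys.
type Cache struct {
	mu    sync.Mutex
	items map[string]string
}

func (c *Cache) Set(key, value string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.items[key] = value
}

func (c *Cache) Get(key string) (string, bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	v, ok := c.items[key]
	return v, ok
}

// Listen deletes "user:<id>" for each event until the channel is closed,
// then closes done. Until an event is processed, readers still see the old value.
func (c *Cache) Listen(events <-chan UserUpdated, done chan<- struct{}) {
	for ev := range events {
		c.mu.Lock()
		delete(c.items, "user:"+ev.UserID)
		c.mu.Unlock()
	}
	close(done)
}
```

The gap between `Update` publishing and `Listen` deleting is exactly the pub-sub latency window described in the challenges above.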

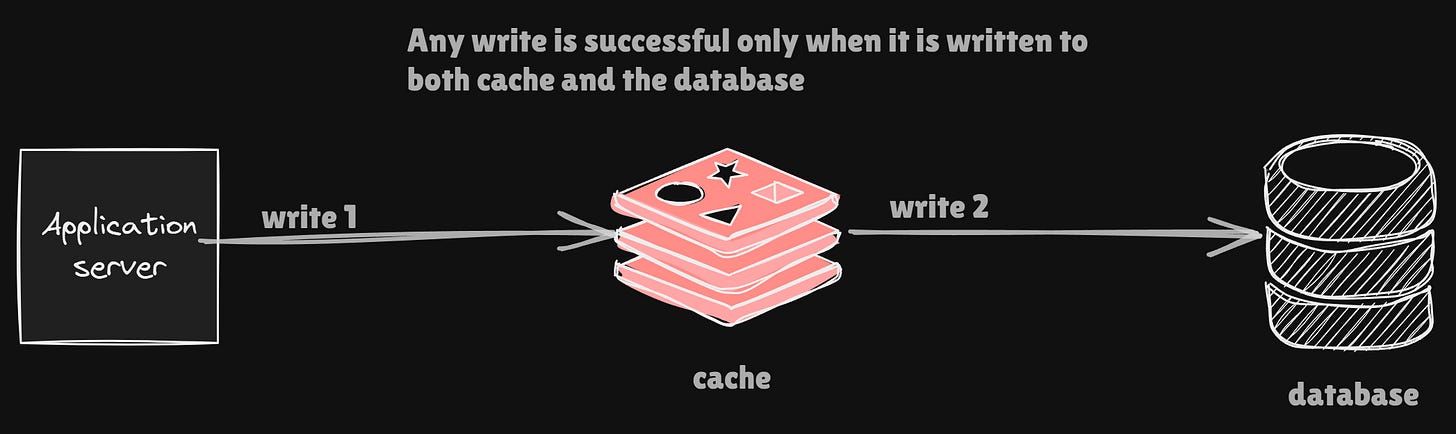

Write-Through Caching

Write path:

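```go
func updateUser(userID string, data User) error {
	// Write the source of truth first, so the cache never holds data the DB rejected.
	if err := db.Save(userID, data); err != nil {
		return err
	}
	// Then update the cache synchronously, in the same request
	// (assumes this cache client's Set returns an error).
	return cache.Set("user:"+userID, data)
}
```

Pros: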

Always cache-consistent on writes.

Cons:

Increased write latency (write hits DB + cache).

Needs failure handling: if the DB write succeeds but the cache write fails, the cache and the DB become inconsistent.

Best practice:

Use distributed transactions or retry queues.

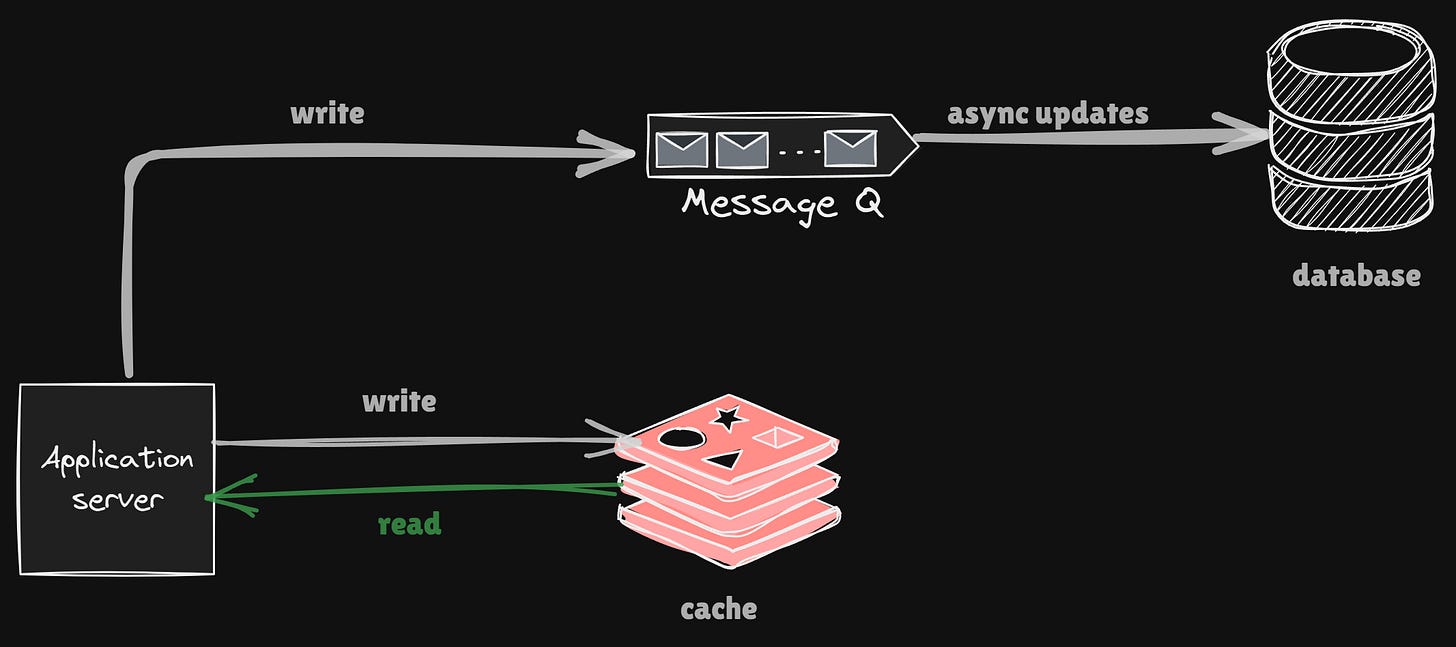

Write-Back/Write-Behind Caching

Mechanism:

`cache.Set` writes to the in-memory buffer. A background process flushes the buffered writes to the DB asynchronously.

Pros:

Excellent write performance.

Cons:

A cache crash loses any writes that have not been flushed yet, unless a write-ahead log is used.

Other readers querying the DB directly see stale data until the flush happens.

Best practice:

Persist pending writes in a message queue (Kafka, RabbitMQ) or a disk-backed write buffer.
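A minimal write-behind sketch, assuming a hypothetical `save` function for the DB write. `Set` only touches memory and marks the key dirty; `Flush`, called from a ticker in `Start`, persists the dirty keys. Because the dirty set lives only in memory, this sketch has exactly the crash-loss problem described above; making it durable would mean persisting the buffer first.

```go
package main

import (
	"sync"
	"time"
)

// WriteBehindCache acknowledges writes once they are in memory and marks
// them dirty; Flush persists dirty entries to the DB later.
type WriteBehindCache struct {
	mu    sync.Mutex
	data  map[string]string
	dirty map[string]string             // pending writes not yet in the DB
	save  func(key, value string) error // hypothetical DB write
}

func NewWriteBehindCache(save func(key, value string) error) *WriteBehindCache {
	return &WriteBehindCache{data: map[string]string{}, dirty: map[string]string{}, save: save}
}

// Set updates memory only; repeated writes to one key coalesce into one flush.
func (c *WriteBehindCache) Set(key, value string) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.data[key] = value
	c.dirty[key] = value
}

func (c *WriteBehindCache) Get(key string) (string, bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	v, ok := c.data[key]
	return v, ok
}

// Flush writes pending entries to the DB. A failed entry stays dirty for the
// next flush, unless a newer write for the same key has arrived meanwhile.
func (c *WriteBehindCache) Flush() {
	c.mu.Lock()
	pending := c.dirty
	c.dirty = map[string]string{}
	c.mu.Unlock()
	for k, v := range pending {
		if err := c.save(k, v); err != nil {
			c.mu.Lock()
			if _, newer := c.dirty[k]; !newer {
				c.dirty[k] = v
			}
			c.mu.Unlock()
		}
	}
}

// Start flushes every interval in a background goroutine until stop is
// closed, with a final flush on shutdown.
func (c *WriteBehindCache) Start(interval time.Duration, stop <-chan struct{}) {
	go func() {
		ticker := time.NewTicker(interval)
		defer ticker.Stop()
		for {
			select {
			case <-ticker.C:
				c.Flush()
			case <-stop:
				c.Flush()
				return
			}
		}
	}()
}
```

Until `Flush` runs, a reader going straight to the DB sees the old value, which is the staleness trade-off listed in the cons.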

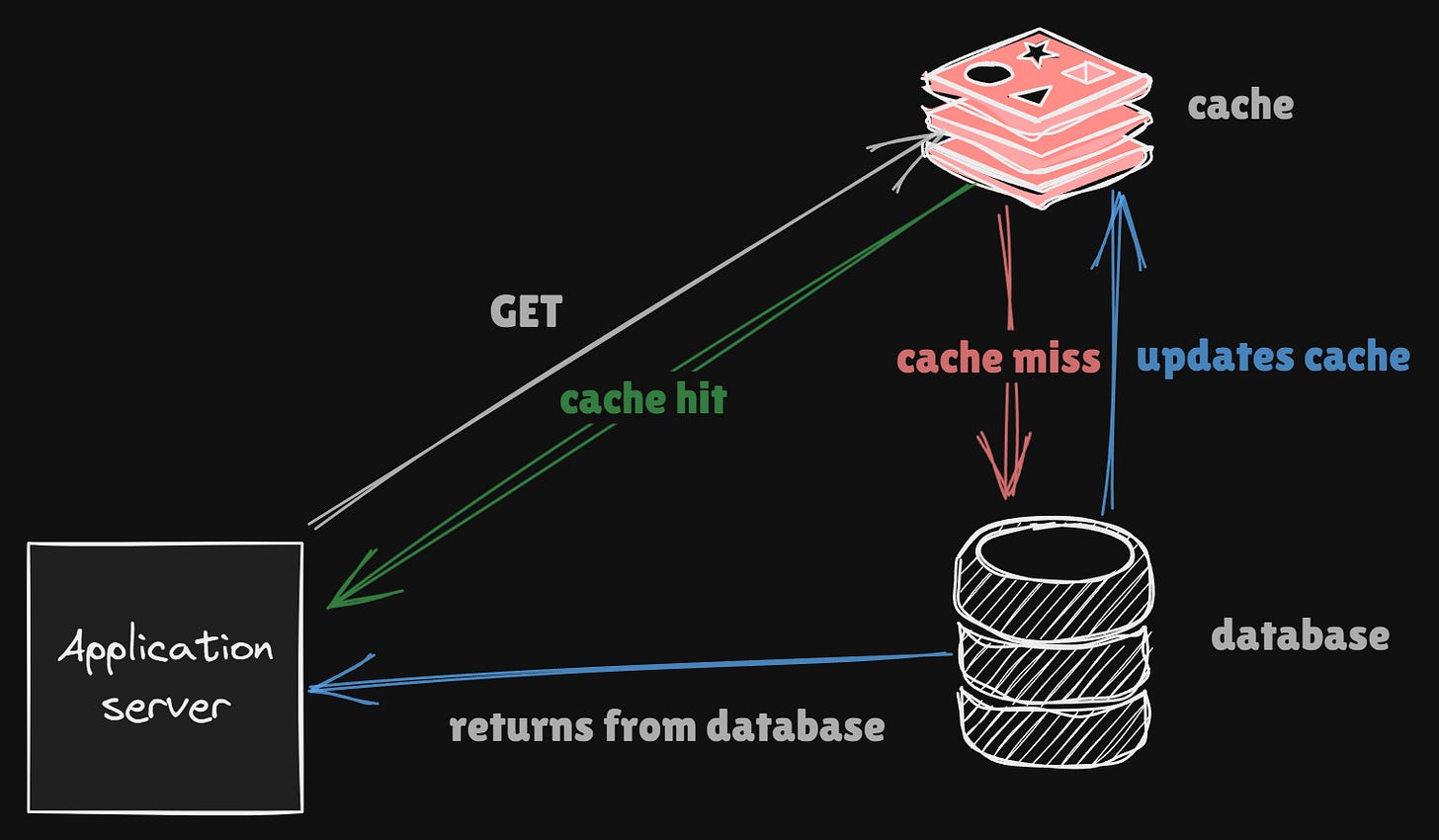

Cache-Aside (Lazy Loading)

Pattern:

```go
val, found := cache.Get(key)
if !found {
	// Cache miss: load from the DB and populate the cache.
	val = db.Query(key)
	cache.Set(key, val)
}
return val
```

Write: update the DB, then optionally delete the cache key.

Invalidation:

Manual deletion (`cache.Delete(key)`) or TTL. If the deletion fails, stale data is served until the entry expires (if it has a TTL at all).

Best practice:

Use locks or "singleflight" deduplication to prevent a thundering herd (many concurrent requests missing on the same key and all hitting the DB at once).
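A self-contained version of the read and write paths, assuming a hypothetical `Store` interface for the DB and an in-memory `mapStore` that counts queries so the hit/miss behaviour is visible. On write, it deletes the key rather than overwriting it, so the next read reloads from the DB.

```go
package main

import "sync"

// Store is a hypothetical source of truth, such as a DB client.
type Store interface {
	Query(key string) string
	Update(key, value string)
}

// mapStore is an in-memory Store for illustration; it counts queries.
type mapStore struct {
	data    map[string]string
	queries int
}

func (s *mapStore) Query(key string) string {
	s.queries++
	return s.data[key]
}

func (s *mapStore) Update(key, value string) {
	s.data[key] = value
}

// CacheAside keeps the cache in front of the Store; the application, not
// the cache, is responsible for loading and invalidating entries.
type CacheAside struct {
	mu    sync.Mutex
	items map[string]string
	db    Store
}

// Get checks the cache, falls back to the DB on a miss, then populates the cache.
func (c *CacheAside) Get(key string) string {
	c.mu.Lock()
	val, found := c.items[key]
	c.mu.Unlock()
	if found {
		return val
	}
	val = c.db.Query(key)
	c.mu.Lock()
	c.items[key] = val
	c.mu.Unlock()
	return val
}

// Update writes the DB first, then deletes the cached copy.
func (c *CacheAside) Update(key, value string) {
	c.db.Update(key, value)
	c.mu.Lock()
	delete(c.items, key)
	c.mu.Unlock()
}
```

Deleting on write keeps the write path simple, but it does not close every race: a reader that loaded the old value just before the update can still write it back after the delete, which is one reason to pair cache-aside with a TTL.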

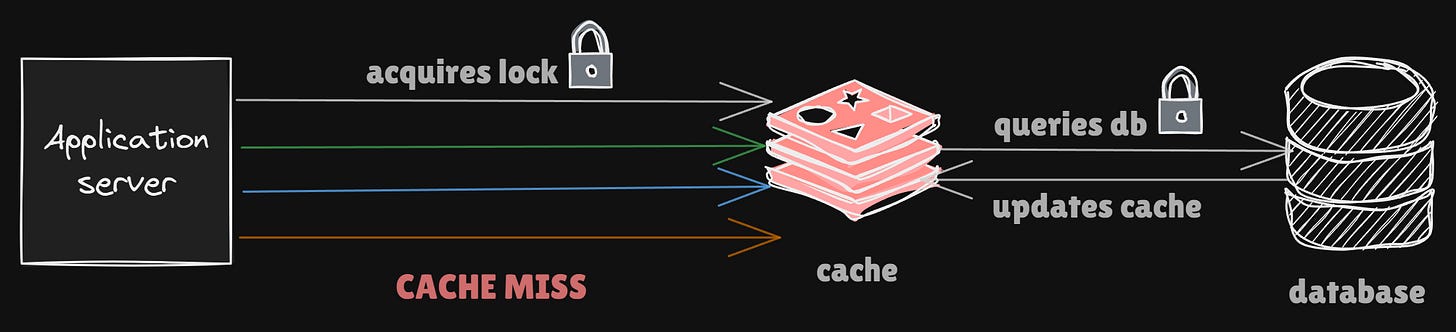

Tackling the Thundering Herd problem

Locking (Mutex)

When a cache miss happens:

The first request acquires a lock (via Redis or mutex).

Others wait until the lock is released.

Only the first request fetches from the DB and populates the cache.

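```go
import "sync"

var mu sync.Mutex // a single lock shared by every key

func getUser(userID string) User {
	// Fast path: cache hits never touch the lock.
	if val, found := cache.Get(userID); found {
		return val
	}
	mu.Lock()
	defer mu.Unlock()
	// Re-check: another request may have filled the cache while we waited.
	if val, found := cache.Get(userID); found {
		return val
	}
	// Only the request holding the lock queries the DB.
	val := db.Query(userID)
	cache.Set(userID, val)
	return val
}
```

This works only in-process, and a single global mutex also serializes misses for unrelated keys. For distributed systems, Redis or etcd-based distributed locks are used.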

Singleflight

Go's `singleflight` package (`golang.org/x/sync/singleflight`) prevents duplicate function executions for the same key:

The first request runs `db.Query`.

Other concurrent requests wait for the same result.

The result is shared with all waiters.

Prevents DB overload on concurrent cache misses.
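To show what that deduplication involves, here is a minimal, standard-library-only sketch of the idea. It is a simplified illustration, not the `x/sync` implementation: it uses string results, does not handle panics in `fn`, and its third return value (`waited`) only reports whether this caller reused another caller's in-flight result.

```go
package main

import "sync"

// call tracks one in-flight execution and its result.
type call struct {
	wg  sync.WaitGroup
	val string
	err error
}

// Group deduplicates concurrent calls that share a key: the first caller
// runs fn, and later callers wait for it and receive the same result.
type Group struct {
	mu    sync.Mutex
	calls map[string]*call
}

// Do runs fn at most once per key among concurrent callers.
func (g *Group) Do(key string, fn func() (string, error)) (val string, err error, waited bool) {
	g.mu.Lock()
	if g.calls == nil {
		g.calls = make(map[string]*call)
	}
	if c, ok := g.calls[key]; ok {
		g.mu.Unlock()
		c.wg.Wait() // another caller is already fetching this key
		return c.val, c.err, true
	}
	c := &call{}
	c.wg.Add(1)
	g.calls[key] = c
	g.mu.Unlock()

	c.val, c.err = fn()
	c.wg.Done() // wake every waiter with the shared result

	// Forget the key so a later miss triggers a fresh load.
	g.mu.Lock()
	delete(g.calls, key)
	g.mu.Unlock()
	return c.val, c.err, false
}
```

In real code, prefer the maintained `golang.org/x/sync/singleflight` package, whose `Group.Do` has the same `(v, err, shared)` result shape with `interface{}` (`any`) values.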

var g singleflight.Group

func getUser(userID string) (User, error) {

val, err, _ := g.Do(userID, func() (any, error) {

user := db.Query(userID)

cache.Set(userID, user)

return user, nil

})

return val.(User), err

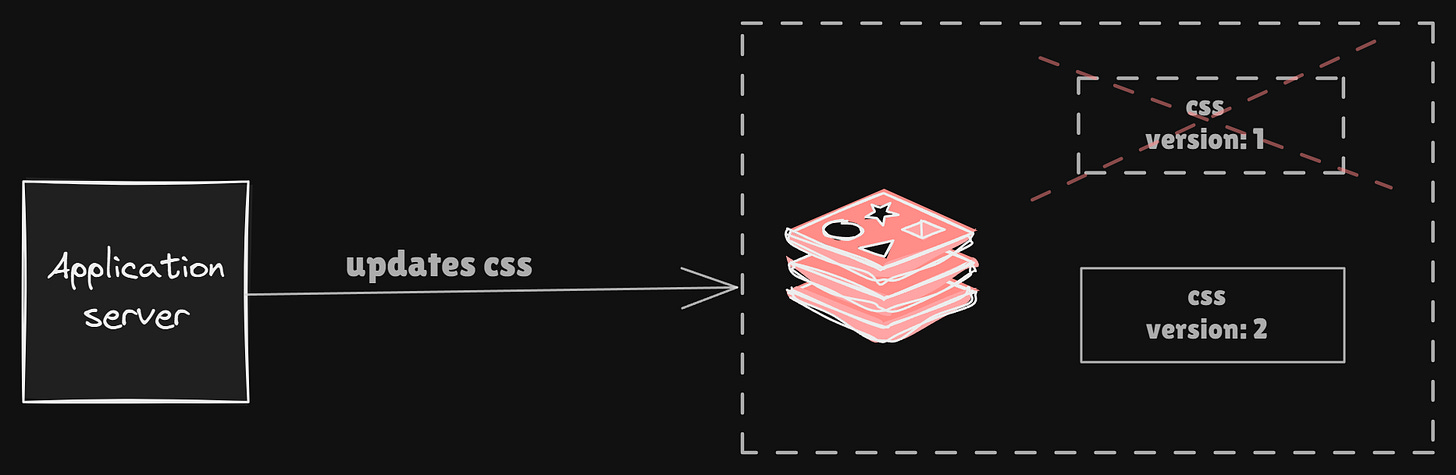

}Versioning/Key-based invalidation

Technique:

Append version/timestamp to cache key:

`css:123:v7` → next update → `css:123:v8`

Pros:

Immutable-style caching (no deletes needed).

Cons:

Old keys pile up

Requires reference-counting or TTL for cleanup.

Best practice:

Ideal for immutable assets like CSS. In microservices, it helps avoid coordinating evictions across services, since no one has to delete the old key.
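A sketch of version-based keys, assuming a hypothetical `VersionedKeys` helper that keeps the current version per entity in memory (in a real system the version would live somewhere shared, such as the DB row or a counter in the cache itself). An update bumps the version, readers then compute the new key, and the old entry is simply left for its TTL to clean up.

```go
package main

import (
	"fmt"
	"sync"
)

// VersionedKeys builds cache keys that embed a per-entity version,
// e.g. "css:123:v7". Old keys are never deleted, only abandoned.
type VersionedKeys struct {
	mu       sync.Mutex
	prefix   string
	versions map[string]int // current version per entity ID
}

func NewVersionedKeys(prefix string) *VersionedKeys {
	return &VersionedKeys{prefix: prefix, versions: make(map[string]int)}
}

// Key returns the cache key for the entity's current version.
func (v *VersionedKeys) Key(id string) string {
	v.mu.Lock()
	defer v.mu.Unlock()
	return fmt.Sprintf("%s:%s:v%d", v.prefix, id, v.versions[id])
}

// Bump is called on every update and returns the new key; readers switch
// to it immediately, with no delete of the old key required.
func (v *VersionedKeys) Bump(id string) string {
	v.mu.Lock()
	v.versions[id]++
	v.mu.Unlock()
	return v.Key(id)
}
```

Since nothing is ever overwritten in place, two services can cache the same asset without agreeing on when to evict it; they only need to agree on the current version.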

In Brief

Cache invalidation may be hard, but it is not unsolvable. The key lies in choosing the right strategy for the use case and understanding its trade-offs:

TTL-based caching is simple and effective when eventual consistency is acceptable.

Event-driven invalidation keeps the cache in sync with writes but requires strong coordination.

Write-through and write-back both keep the cache updated on writes, but trade write latency against durability.

Cache-aside is widely used and flexible, but prone to thundering herd unless handled well.

Locking and singleflight help mitigate load spikes during cache misses.

Versioning-based invalidation is powerful for immutable or infrequently changing data.

Each approach shines in a different scenario; there’s no one-size-fits-all. We need to be explicit about our consistency requirements, read/write ratios, and system complexity before settling on a strategy.

Endnotes

Caching is not just about faster reads; it's about making trade-offs between performance, consistency, and complexity. A thoughtful caching layer can significantly improve the system’s reliability and speed, but only if we respect the complexities involved in invalidation.

That's all on caching (for now). Next week, we will look at something more interesting.

Stay tuned.