Building Truly Idempotent Systems

Request deduplication, transactional outbox, and consumers that don’t panic under retries

In the last post, we explored the intricacies of idempotent operations in backend design. Using an e-commerce order system as the example, we saw how idempotency keys are generated, how they are handled, and how they get rid of “double payments”.

In this post, we will go a little deeper and look at how truly idempotent operations are managed at scale, when multiple services are involved.

Because once services become distributed, a simple idempotency key turns into an entirely different beast to handle and manage.

Let’s look at a few issues we might face while building idempotent systems.

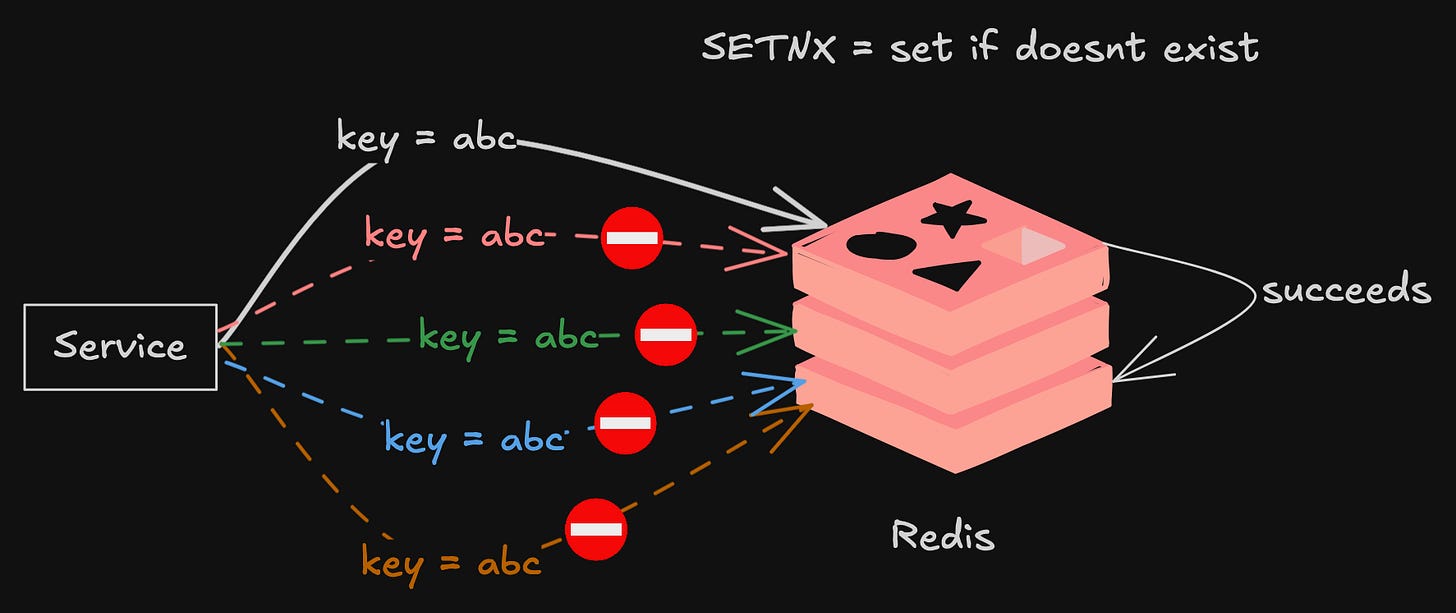

The Race Condition

Consider this sequence of events in a distributed system, without proper synchronization:

1. Request 1 arrives with idempotency_key = ‘abc’.
2. The payment service checks its store for ‘abc’. It doesn’t exist.
3. A fraction of a second later, Request 2 arrives with the same key.
4. Request 2 also checks the store for ‘abc’. It still doesn’t exist, because Request 1 hasn’t committed yet.
5. Both requests proceed to call the payment gateway.
6. Both payment calls succeed, and the customer is double-charged.

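This is the classic double-submit problem. The “simple check” (if the key doesn’t exist → process and store) is safe on a single thread, where requests are handled one at a time, but it is not atomic: the check and the store are two separate steps. It falls apart in a distributed system, where multiple service instances can process the same request concurrently and all of them pass the check before any of them stores the key.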

The solution is not just a GET followed by a SET, but a single, atomic operation like SETNX (Set if Not Exists) in Redis, which is guaranteed to succeed only for the first caller.

The winning instance then processes the request, while the others fail the SETNX and immediately return an error or a stored result.
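Here is a minimal sketch of that idea in Go. It does not talk to Redis: KeyStore is a hypothetical in-memory stand-in whose SetNX method mirrors the semantics of Redis SETNX by doing the existence check and the write under a single lock. handlePayment and its chargeGateway callback are likewise illustrative names, not part of any library. Ten goroutines retry the same request with key ‘abc’, and only one of them reaches the gateway.

```go
package main

import (
	"fmt"
	"sync"
)

// KeyStore is a hypothetical in-memory stand-in for Redis. Its SetNX
// mirrors Redis SETNX: store the value only if the key is absent.
type KeyStore struct {
	mu   sync.Mutex
	data map[string]string
}

func NewKeyStore() *KeyStore {
	return &KeyStore{data: make(map[string]string)}
}

// SetNX runs the existence check and the write under one lock, so no
// other caller can slip in between them. It reports whether this caller won.
func (s *KeyStore) SetNX(key, value string) bool {
	s.mu.Lock()
	defer s.mu.Unlock()
	if _, exists := s.data[key]; exists {
		return false
	}
	s.data[key] = value
	return true
}

// handlePayment (hypothetical) calls the gateway only if this request claims
// the idempotency key; losers would return an error or a stored result instead.
func handlePayment(store *KeyStore, key string, chargeGateway func()) bool {
	if !store.SetNX(key, "in-progress") {
		return false
	}
	chargeGateway()
	return true
}

func main() {
	store := NewKeyStore()
	var (
		mu      sync.Mutex
		charges int
		wg      sync.WaitGroup
	)
	charge := func() {
		mu.Lock()
		charges++
		mu.Unlock()
	}

	// Ten concurrent retries of the same request, all carrying key "abc".
	for i := 0; i < 10; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			handlePayment(store, "abc", charge)
		}()
	}
	wg.Wait()
	fmt.Println("gateway charges:", charges) // gateway charges: 1
}
```

Against a real Redis, the equivalent single command is SET abc <value> NX EX <seconds>. The expiry is a design choice worth considering: without it, the key of a request that crashed mid-flight stays “in-progress” and blocks its retries forever.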

But this only solves synchronization at the request level. Let’s look at an event-level problem we might face in our design.

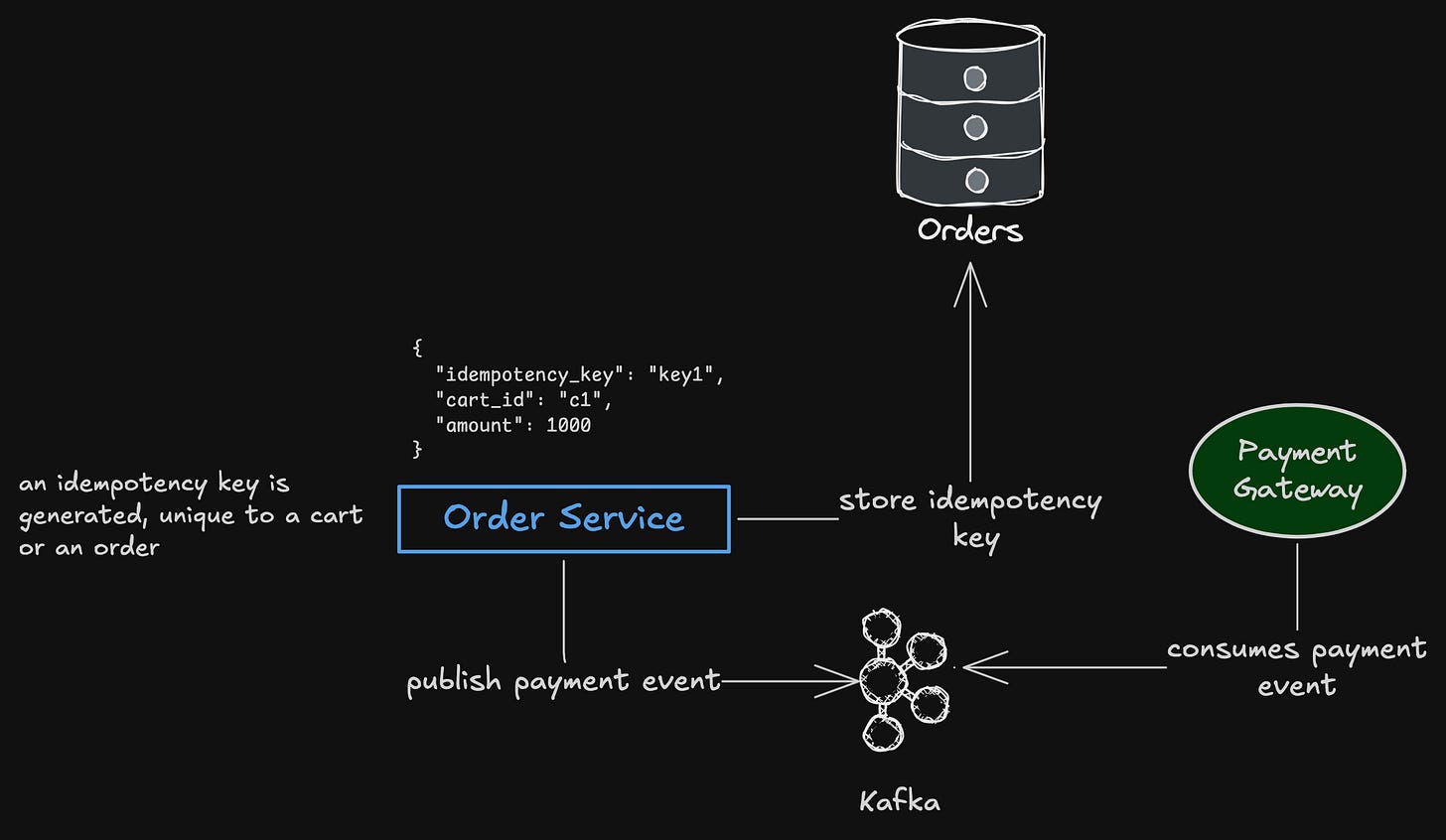

Generating a Key and Sending an Event

Let’s recall what we did in the previous post to build an idempotent payment system:

1. Create an idempotency_key at OrderService (it could be deterministic or a random key).
2. Store that idempotency_key along with the order in the orders table.
3. Send the payment event with the idempotency_key to a message broker, to be consumed by PaymentService.

There are two major things that need to be done here, across two different systems:

1. Store the idempotency_key in the database.
2. Send the payment event to Kafka.

Let’s look at a couple of ways we can achieve this.

Store in Database first, then send event

Fine, let’s make sure the database transaction is committed before we send the event. This way, we know that at least the key is stored in the database.

But there is a problem — what happens if the service crashes before sending the event to Kafka?

If that happens, the payment would not be initiated, and since we already stored the idempotency_key for the associated order in our database, we would be in an inconsistent state.

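```go
idempotencyKey := keystore.Generate()
event := map[string]string{"idempotency_key": idempotencyKey}

// Store the key (with the order) and commit before publishing anything.
if err := db.Insert(tx, idempotencyKey); err != nil {
	return http.StatusInternalServerError
}
if err := tx.Commit(); err != nil {
	return http.StatusInternalServerError
}

// What if the service crashes here? The key is committed,
// but the payment event is never published.

if err := publisher.Publish(event); err != nil {
	return http.StatusInternalServerError
}
```

Send event first, then store in database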

You might have guessed the problem here: what happens if the transaction doesn’t commit? We have already sent the event with the key, but we failed to store the order in the database.

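```go
idempotencyKey := keystore.Generate()
event := map[string]string{"idempotency_key": idempotencyKey}

// Publish the payment event first.
if err := publisher.Publish(event); err != nil {
	return http.StatusInternalServerError
}

// What if the service crashes here, or the transaction fails to commit?
// The event is already out, but the order was never stored.

if err := db.Insert(tx, idempotencyKey); err != nil {
	return http.StatusInternalServerError
}
if err := tx.Commit(); err != nil {
	return http.StatusInternalServerError
}
```

Again, we end up in an inconsistent state. So, what are our options?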

Can we use distributed transactions?

Since we are working with a distributed system, we need some kind of distributed transaction, right? It makes sense: if one of the two operations fails, we can simply roll back to the previous state.

But if we try to use two-phase commit (2PC) as our distributed transaction mechanism, notice that we would be running 2PC between the database and the message broker, which might not be viable: they won’t necessarily support 2PC.

Even if they do support it, it’s just not a good practice to tightly couple the database and the message broker this way.

But let’s look at something clever.

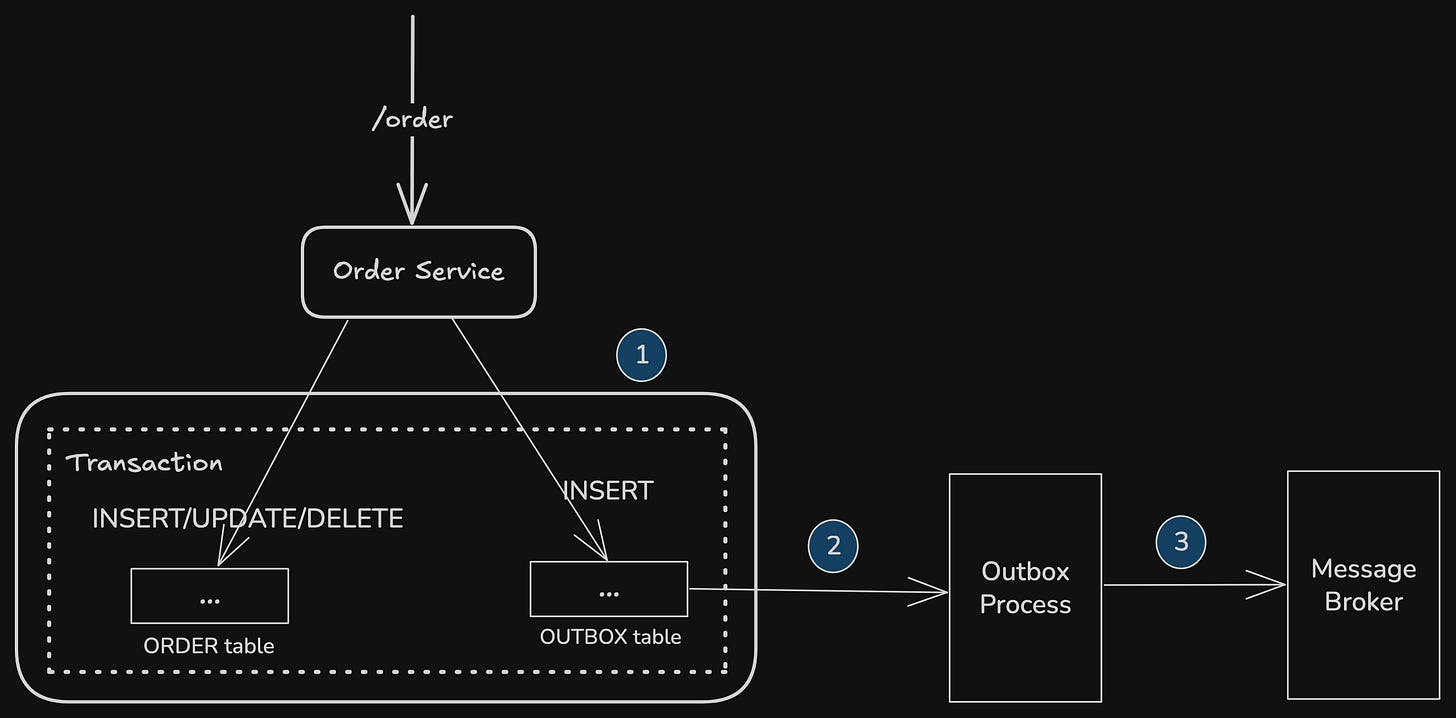

The Transactional Outbox Pattern

Let’s store the event data in another table in the same database; let’s call it the outbox table. Now it becomes simple:

1. In the same transaction, first update the order table, and then the outbox table. If either update fails, the whole transaction is rolled back.
2. A separate outbox process listens to the outbox table, picks up entries in the same order they were written, and sends them to Kafka.
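To make the atomicity concrete, here is a minimal sketch in Go. There is no real database here: DB and Tx are hypothetical in-memory stand-ins in which Commit applies the order row and the outbox row together and Rollback discards both, playing the role that a real transaction (for example database/sql’s BeginTx, Commit and Rollback against a relational database) would play. The table layouts and createOrder are illustrative, and the failAfterOrder flag simulates an error between the two inserts.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// Order and OutboxRow model the two tables (hypothetical schemas).
type Order struct {
	ID             string
	IdempotencyKey string
}

type OutboxRow struct {
	Seq     int // assigned at commit, so the relay can publish in order
	Payload string
}

// DB is a hypothetical in-memory stand-in for a relational database.
type DB struct {
	mu      sync.Mutex
	orders  []Order
	outbox  []OutboxRow
	nextSeq int
}

// Tx buffers writes; nothing is visible until Commit applies them together.
type Tx struct {
	db     *DB
	orders []Order
	events []string
}

func (db *DB) Begin() *Tx { return &Tx{db: db} }

func (tx *Tx) InsertOrder(o Order) { tx.orders = append(tx.orders, o) }

func (tx *Tx) InsertOutbox(payload string) { tx.events = append(tx.events, payload) }

// Commit applies the writes to both tables under one lock: all or nothing.
func (tx *Tx) Commit() {
	tx.db.mu.Lock()
	defer tx.db.mu.Unlock()
	tx.db.orders = append(tx.db.orders, tx.orders...)
	for _, payload := range tx.events {
		tx.db.nextSeq++
		tx.db.outbox = append(tx.db.outbox, OutboxRow{Seq: tx.db.nextSeq, Payload: payload})
	}
}

// Rollback discards the buffered writes to both tables.
func (tx *Tx) Rollback() { tx.orders, tx.events = nil, nil }

var errValidation = errors.New("order validation failed")

// createOrder (hypothetical) writes the order and its payment event in one
// transaction. failAfterOrder simulates an error between the two inserts.
func createOrder(db *DB, orderID, key string, failAfterOrder bool) error {
	tx := db.Begin()
	tx.InsertOrder(Order{ID: orderID, IdempotencyKey: key})
	if failAfterOrder {
		tx.Rollback()
		return errValidation
	}
	tx.InsertOutbox(fmt.Sprintf("pay %s with idempotency_key %s", orderID, key))
	tx.Commit()
	return nil
}

func main() {
	db := &DB{}
	_ = createOrder(db, "order-1", "abc", false)
	_ = createOrder(db, "order-2", "def", true) // rolled back: neither row is written

	fmt.Println(len(db.orders), len(db.outbox)) // 1 1
}
```

The relay never sees a half-written order: either both rows are committed or neither is, which is exactly the property the two naive orderings lacked.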

And what happens if the outbox process fails? We can simply spin up a new one, and it picks up where the previous one left off, since the unpublished entries are still sitting in the outbox table.

It’s such a beautiful solution to a difficult problem.

The Outbox process that handles the publishing typically works in one of two ways:

A Polling Publisher that periodically scans the table for new records

A Change Data Capture (CDC) tool that reads the database’s commit log directly, giving near-real-time publishing with minimal overhead

Drawback

The Outbox process might publish a message more than once. It might crash after publishing a message but before recording the fact that it has done so.

When it restarts, it will then publish the message again.

As a result, a message consumer must be idempotent, for example by tracking the IDs of the messages it has already processed.

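At-Least-Once vs Exactly-Once

As we saw above, when using a message broker, events are delivered “at least once”, which means consumers have to be designed to be idempotent. In practice, exactly-once behavior comes from combining at-least-once delivery with a consumer that detects and ignores duplicates.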

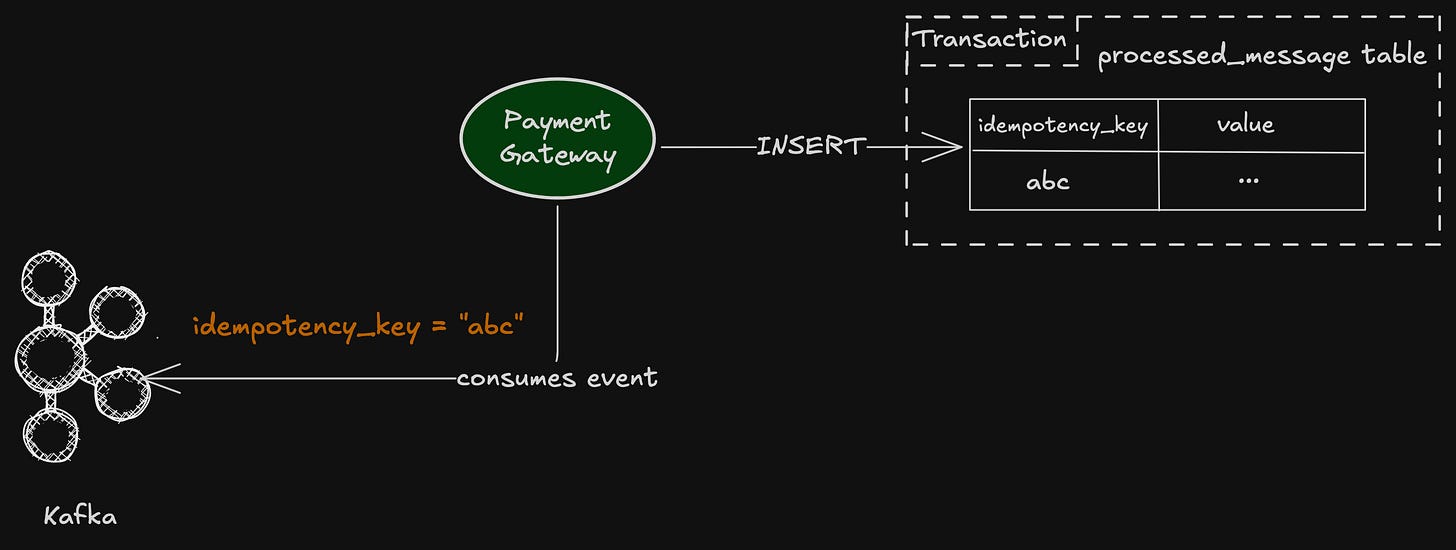

We make a consumer idempotent by having it record the IDs of processed messages in the database. When processing a message, a consumer can detect and discard duplicates by querying the database.

After starting the database transaction, the message handler inserts the message’s ID (its idempotency_key) into the processed_message table, then applies the message’s effect in the same transaction, so both writes commit together. Since idempotency_key is the processed_message table’s primary key, the INSERT fails if the message has already been processed successfully. The message handler can then roll back the transaction and ignore the message.
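Here is a minimal sketch of such a consumer in Go. Store and Tx are hypothetical in-memory stand-ins for the consumer’s database, errDuplicateKey plays the role of the primary-key violation a real database would raise, and Message, handle and the payments table are illustrative names. The sketch handles messages one at a time; with concurrent handlers, it is the database’s primary-key constraint that makes the second insert fail.

```go
package main

import (
	"errors"
	"fmt"
)

// errDuplicateKey plays the role of the database's primary-key violation.
var errDuplicateKey = errors.New("duplicate key in processed_message")

// Store is a hypothetical in-memory stand-in for the consumer's database:
// processedMessage is keyed by idempotency_key; payments holds the effects.
type Store struct {
	processedMessage map[string]bool
	payments         []string
}

// Tx buffers one handler's writes; nothing is visible until Commit.
type Tx struct {
	store     *Store
	processed []string
	payments  []string
}

func (s *Store) Begin() *Tx { return &Tx{store: s} }

// InsertProcessed fails the way an INSERT on an existing primary key would.
func (tx *Tx) InsertProcessed(key string) error {
	if tx.store.processedMessage[key] {
		return errDuplicateKey
	}
	tx.processed = append(tx.processed, key)
	return nil
}

func (tx *Tx) InsertPayment(orderID string) { tx.payments = append(tx.payments, orderID) }

// Commit applies the processed-message row and the payment together.
func (tx *Tx) Commit() {
	for _, k := range tx.processed {
		tx.store.processedMessage[k] = true
	}
	tx.store.payments = append(tx.store.payments, tx.payments...)
}

// Rollback drops the buffered writes.
func (tx *Tx) Rollback() { tx.processed, tx.payments = nil, nil }

// Message is a payment event as delivered by the broker (hypothetical shape).
type Message struct {
	IdempotencyKey string
	OrderID        string
}

// handle records the message's key and applies its effect in one transaction,
// so a redelivered message is caught by the key insert and skipped.
func handle(s *Store, m Message) (bool, error) {
	tx := s.Begin()
	if err := tx.InsertProcessed(m.IdempotencyKey); err != nil {
		tx.Rollback()
		if errors.Is(err, errDuplicateKey) {
			return false, nil // already processed: acknowledge and ignore
		}
		return false, err
	}
	tx.InsertPayment(m.OrderID)
	tx.Commit()
	return true, nil
}

func main() {
	s := &Store{processedMessage: map[string]bool{}}
	msg := Message{IdempotencyKey: "abc", OrderID: "order-1"}

	first, _ := handle(s, msg)  // first delivery
	second, _ := handle(s, msg) // redelivery of the same message

	fmt.Println(first, second, len(s.payments)) // true false 1
}
```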

Wrapping Up

So where does this leave us?

Idempotency at scale is never simple. We need layers:

Request-level deduplication, so that concurrent requests carrying the same key can’t race each other

Transactional outbox, so that events and database writes are committed together, even if the service crashes midway

Idempotent consumers to safely handle the “at-least once” reality of distributed messaging

None of these alone is enough. Together, they form the pillars that let the system handle retries, crashes, and concurrency without charging a customer twice or leaving orders half-finished.

That’s the real lesson here: idempotency isn’t a one-liner in code, it’s a design philosophy we put into every layer of the system.
